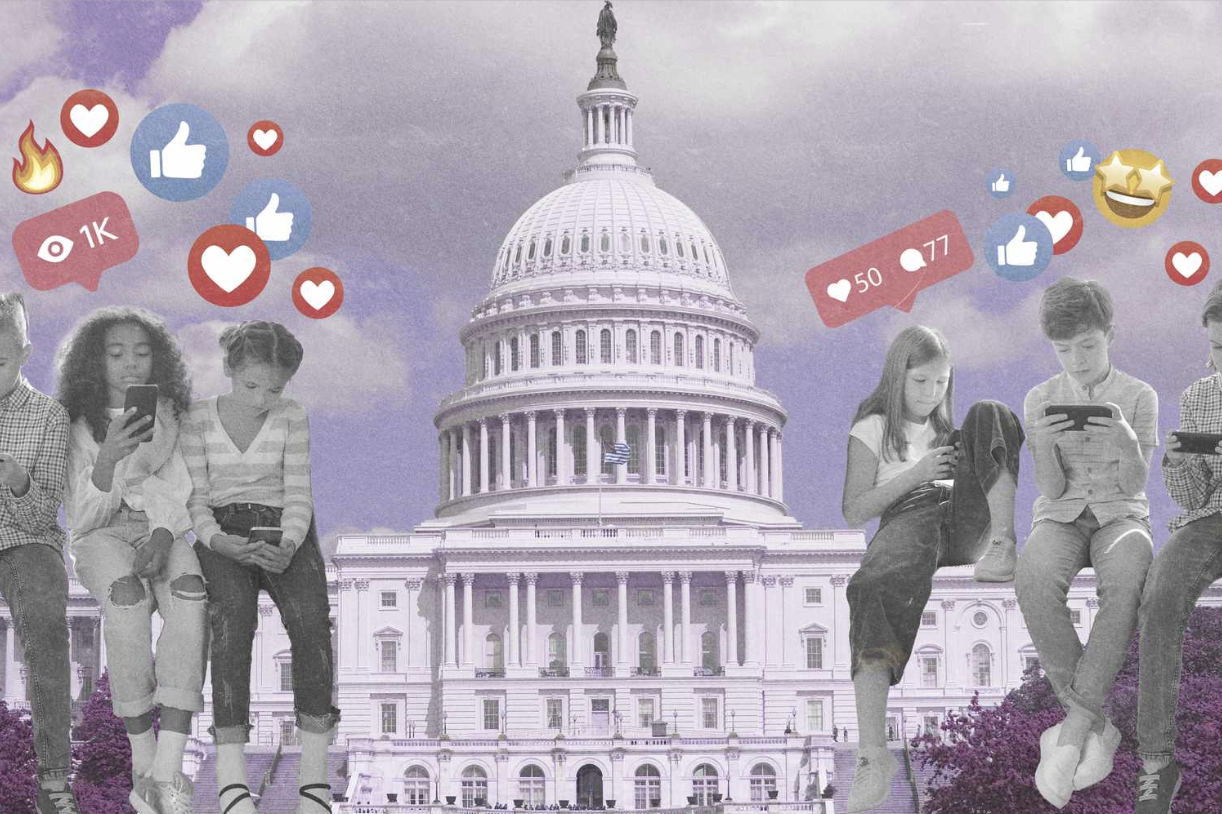

Kids Online: Can the United States Government Effectively Regulate Them?

Social media platforms such as TikTok and Instagram have revitalized media consumption; however, many of their users are younger than thirteen and are particularly susceptible to harms stemming from media content. In the United Kingdom, the Information Commissioner’s Office (ICO) estimated that 1.4 million children under the age of thirteen were using TikTok despite the platform’s policy that users must be at least 13 to sign up. In the United States, a recent rise in state online child safety legislation has drawn the attention of the federal government. The Kids Online Safety Act (KOSA), first introduced in 2022, aims to protect children online by restricting access to social media platforms and imposing increased surveillance. This bipartisan bill is co-authored by Senators Richard Blumenthal (D-CT) and Marsha Blackburn (R-TN) and now has more than 60 co-sponsors, enough to potentially pass the Senate. While there is a dire need to address safety concerns regarding minors online, federal regulation must remain cognizant of the potential for censorship that could harm marginalized groups. Given the growing number of state online child safety regulations and the increased harm minors face on these platforms, KOSA is an easily justified and necessary act to protect minors.

According to the University of North Carolina at Chapel Hill’s “The State of State Technology Policy 2023 Report,” thirteen states passed 23 online child safety laws last year. This legislation, often supported by both Democrats and Republicans, included limits on minors’ social media use, expanded parental access, and stricter age verification requirements. The push stems from ongoing debates over what media is appropriate for children to view and consume online. In recent years, networks such as Twitter, Instagram, Facebook, TikTok, and YouTube have changed the global perception of social media by increasing the visibility of social movements and disseminating information at rapid speeds. However, social media’s accessibility has also exposed minors to harmful behaviors such as eating disorders and substance abuse and increased their engagement with them. There are also concerns about children’s exposure to predatory marketing. TikTok’s viral “Sephora kids” trend, for example, raises not only concerns about children’s behavior inside Sephora stores but also health concerns for children using products that are too harsh and not made for their skin. These harmful behaviors and mass consumption among minors are fueled by social media use, and the federal government is now taking the initiative to ensure online child safety at the national level.

Prior to the introduction of KOSA, the United States’ primary federal online child safety law was the Children’s Online Privacy Protection Act (COPPA). Effective as of 2000, COPPA defines a child as an individual under the age of thirteen and restricts companies’ ability to market to children. It also requires companies to obtain verifiable parental consent before collecting personal information from a child.

Social media platforms’ child safety policies have recently faced federal scrutiny over a perceived lack of protections. This past January, the chief executives of five major platforms, Meta, Snapchat, Discord, TikTok, and X, were questioned at a Senate Judiciary Committee hearing about the ineffectiveness of their child safety policies and their companies’ role in exposing children to predatory marketing and addictive behavior. At the hearing, two of the executives, Evan Spiegel of Snapchat and Linda Yaccarino of X, pledged their support for KOSA. The other executives argued that KOSA’s vague provisions would conflict with free speech because they would require a degree of censorship. LGBTQ+ advocacy groups and digital rights groups have voiced similar concerns, along with objections to the increased monitoring and data collection the bill would entail. The digital rights advocacy group Fight for the Future argued that KOSA would allow state attorneys general to decide what online content is considered harmful, a power that could be used to advance state efforts to criminalize some of the nation’s most controversial subjects, such as drag performances. The prospect of state attorneys general deciding what counts as harmful content was central to the controversy surrounding KOSA. In response to these concerns, lawmakers made several changes to the bill. For example, the Federal Trade Commission, rather than state attorneys general, will now enforce the bill’s duty of care. This change led groups such as the Trevor Project and the Human Rights Campaign to drop their prior opposition.

KOSA’s primary aim is to protect children online from viewing harmful content and engaging in addictive social media behavior. KOSA holds social media companies responsible for ensuring that their child safety measures are effective and that reasonable measures are in place to keep children under the age of thirteen off their platforms. Additionally, KOSA will require social media platforms to strengthen their privacy, security, and safety features for users under the age of eighteen. Companies that fail to implement these measures can be held accountable and liable, and may face lawsuits.

KOSA is supported by many organizations, including the National Education Association, the American Psychological Association, the American Academy of Pediatrics, and the American Foundation for Suicide Prevention. Although the bill has made significant progress since 2022, it still requires a Senate floor vote, passage of a companion bill in the House, and the President’s signature. Given the many hearings on online safety, the debate over how to keep kids safe online is unlikely to end anytime soon. As the nation continues to grapple with the increasing prevalence of social media, it is imperative that federal regulation address the harm it poses to minors and supplement state legislation.

Dre Boyd-Weatherly is a sophomore at Brown University concentrating in International Affairs and Public Policy. She is a staff writer for the Brown Undergraduate Law Review and can be contacted at dre_boyd-weatherly@brown.edu.

Jack Tajmajer is a senior at Brown University concentrating in Political Science and Economics. He is an editor for the Brown Undergraduate Law Review and can be contacted at jack_tajmajer@brown.edu.